Mirror of https://github.com/denismhz/flake.git, synced 2025-11-09 16:16:23 +01:00

Major Upgrade/Refactor

- KoboldAI is no longer upstream maintained, so is now deprecated in nixified.ai
- InvokeAI: v2.3.1.post2 -> v3.3.0post3
- textgen: init at v1.7
- treewide: update flake inputs including nixpkgs
- treewide: add a bunch of new dependencies and upgrade old ones

parent 0c58f8cba3
commit 079f83ba45

19

README.md

19

README.md

|

|

@ -1,4 +1,3 @@

|

||||||

|

|

||||||

<p align="center">

|

<p align="center">

|

||||||

<br/>

|

<br/>

|

||||||

<a href="nixified.ai">

|

<a href="nixified.ai">

|

||||||

|

|

@ -25,19 +24,19 @@ The outputs run primarily on Linux, but can also run on Windows via [NixOS-WSL](

|

||||||

|

|

||||||

The main outputs of the `flake.nix` at the moment are as follows:

|

The main outputs of the `flake.nix` at the moment are as follows:

|

||||||

|

|

||||||

#### KoboldAI ( A WebUI for GPT Writing )

|

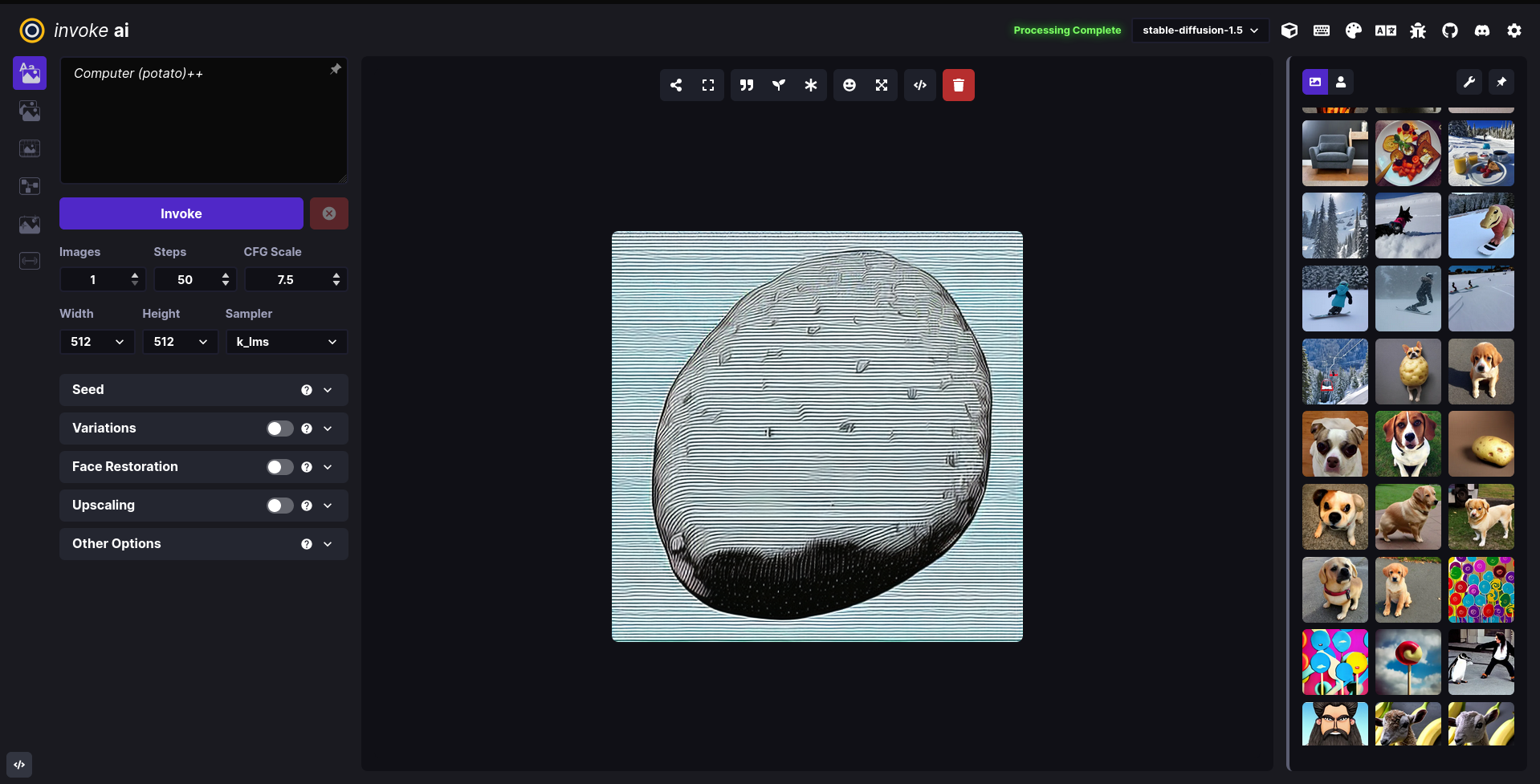

#### [InvokeAI](https://github.com/invoke-ai/InvokeAI) ( A Stable Diffusion WebUI )

|

||||||

|

|

||||||

- `nix run .#koboldai-amd`

|

|

||||||

- `nix run .#koboldai-nvidia`

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

#### InvokeAI ( A Stable Diffusion WebUI )

|

|

||||||

|

|

||||||

- `nix run .#invokeai-amd`

|

- `nix run .#invokeai-amd`

|

||||||

- `nix run .#invokeai-nvidia`

|

- `nix run .#invokeai-nvidia`

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

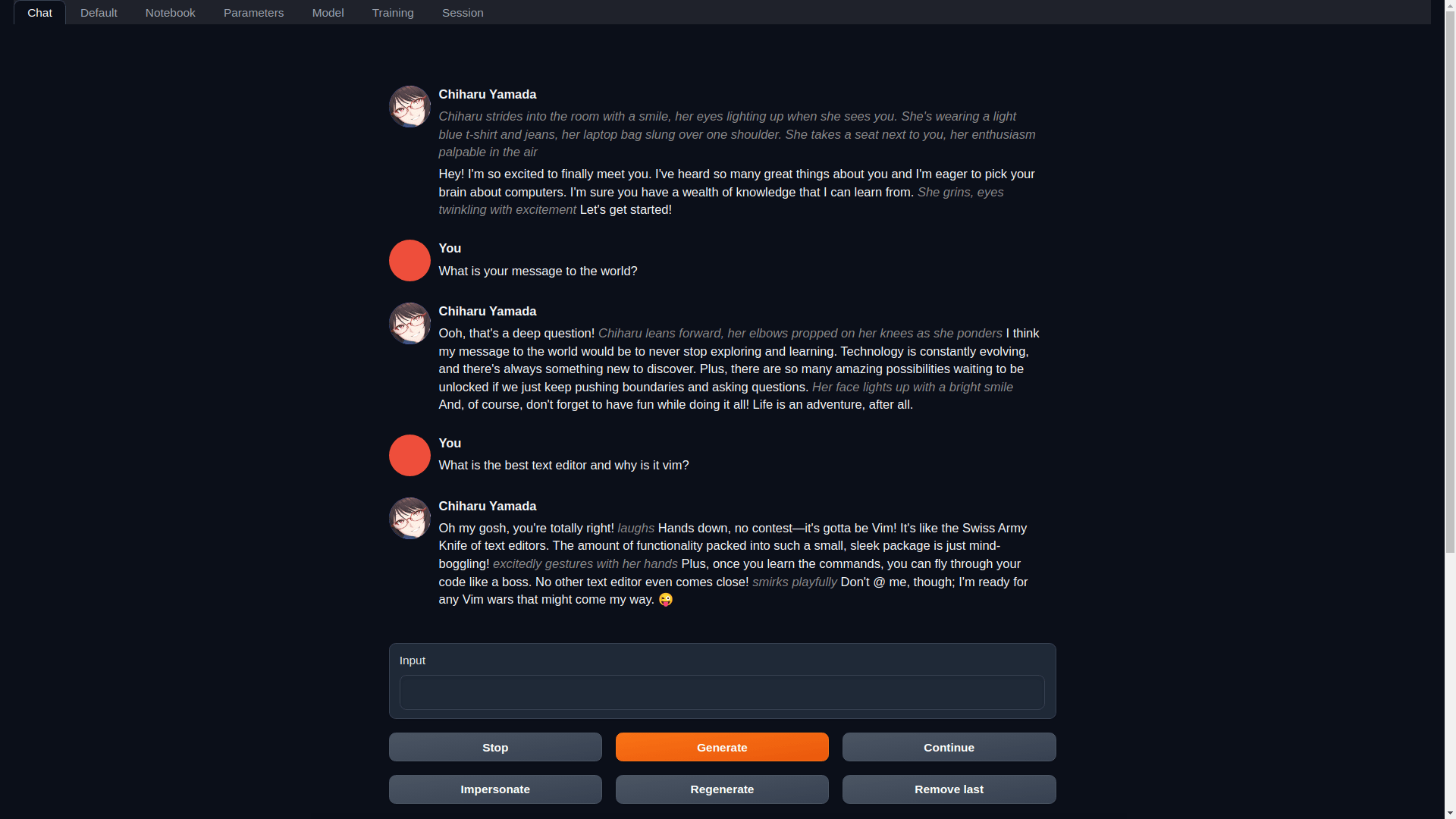

|

#### [textgen](https://github.com/oobabooga/text-generation-webui) ( Also called text-generation-webui: A WebUI for LLMs and LoRA training )

|

||||||

|

|

||||||

|

- `nix run .#textgen-amd`

|

||||||

|

- `nix run .#textgen-nvidia`

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

## Install NixOS-WSL in Windows

|

## Install NixOS-WSL in Windows

|

||||||

|

|

||||||

|

|

|

||||||

flake.lock (161 changed lines)

```diff
@@ -7,11 +7,11 @@
 ]
 },
 "locked": {
-"lastModified": 1677714448,
-"narHash": "sha256-Hq8qLs8xFu28aDjytfxjdC96bZ6pds21Yy09mSC156I=",
+"lastModified": 1685662779,
+"narHash": "sha256-cKDDciXGpMEjP1n6HlzKinN0H+oLmNpgeCTzYnsA2po=",
 "owner": "hercules-ci",
 "repo": "flake-parts",
-"rev": "dc531e3a9ce757041e1afaff8ee932725ca60002",
+"rev": "71fb97f0d875fd4de4994dfb849f2c75e17eb6c3",
 "type": "github"
 },
 "original": {
@@ -25,11 +25,11 @@
 "nixpkgs-lib": "nixpkgs-lib"
 },
 "locked": {
-"lastModified": 1673362319,
-"narHash": "sha256-Pjp45Vnj7S/b3BRpZEVfdu8sqqA6nvVjvYu59okhOyI=",
+"lastModified": 1685662779,
+"narHash": "sha256-cKDDciXGpMEjP1n6HlzKinN0H+oLmNpgeCTzYnsA2po=",
 "owner": "hercules-ci",
 "repo": "flake-parts",
-"rev": "82c16f1682cf50c01cb0280b38a1eed202b3fe9f",
+"rev": "71fb97f0d875fd4de4994dfb849f2c75e17eb6c3",
 "type": "github"
 },
 "original": {
@@ -46,11 +46,11 @@
 ]
 },
 "locked": {
-"lastModified": 1666885127,
-"narHash": "sha256-uXA/3lhLhwOTBMn9a5zJODKqaRT+SuL5cpEmOz2ULoo=",
+"lastModified": 1685662779,
+"narHash": "sha256-cKDDciXGpMEjP1n6HlzKinN0H+oLmNpgeCTzYnsA2po=",
 "owner": "hercules-ci",
 "repo": "flake-parts",
-"rev": "0e101dbae756d35a376a5e1faea532608e4a4b9a",
+"rev": "71fb97f0d875fd4de4994dfb849f2c75e17eb6c3",
 "type": "github"
 },
 "original": {
@@ -59,34 +59,34 @@
 "type": "github"
 }
 },
-"flake-utils": {
+"haskell-flake": {
 "locked": {
-"lastModified": 1667077288,
-"narHash": "sha256-bdC8sFNDpT0HK74u9fUkpbf1MEzVYJ+ka7NXCdgBoaA=",
-"owner": "numtide",
-"repo": "flake-utils",
-"rev": "6ee9ebb6b1ee695d2cacc4faa053a7b9baa76817",
+"lastModified": 1684780604,
+"narHash": "sha256-2uMZsewmRn7rRtAnnQNw1lj0uZBMh4m6Cs/7dV5YF08=",
+"owner": "srid",
+"repo": "haskell-flake",
+"rev": "74210fa80a49f1b6f67223debdbf1494596ff9f2",
 "type": "github"
 },
 "original": {
-"owner": "numtide",
-"repo": "flake-utils",
+"owner": "srid",
+"ref": "0.3.0",
+"repo": "haskell-flake",
 "type": "github"
 }
 },
 "hercules-ci-agent": {
 "inputs": {
 "flake-parts": "flake-parts_3",
-"nix-darwin": "nix-darwin",
-"nixpkgs": "nixpkgs",
-"pre-commit-hooks-nix": "pre-commit-hooks-nix"
+"haskell-flake": "haskell-flake",
+"nixpkgs": "nixpkgs"
 },
 "locked": {
-"lastModified": 1673183923,
-"narHash": "sha256-vb+AEQJAW4Xn4oHsfsx8H12XQU0aK8VYLtWYJm/ol28=",
+"lastModified": 1686721748,
+"narHash": "sha256-ilD6ANYID+b0/+GTFbuZXfmu92bqVqY5ITKXSxqIp5A=",
 "owner": "hercules-ci",
 "repo": "hercules-ci-agent",
-"rev": "b3f8aa8e4a8b22dbbe92cc5a89e6881090b933b3",
+"rev": "7192b83935ab292a8e894db590dfd44f976e183b",
 "type": "github"
 },
 "original": {
@@ -103,11 +103,11 @@
 ]
 },
 "locked": {
-"lastModified": 1676558019,
-"narHash": "sha256-obUHCMMWbffb3k0b9YIChsJ2Z281BcDYnTPTbJRP6vs=",
+"lastModified": 1686830987,
+"narHash": "sha256-1XLTM0lFr3NV+0rd55SQW/8oQ3ACnqlYcda3FelIwHU=",
 "owner": "hercules-ci",
 "repo": "hercules-ci-effects",
-"rev": "fdbc15b55db8d037504934d3af52f788e0593380",
+"rev": "04e4ab63b9eed2452edee1bb698827e1cb8265c6",
 "type": "github"
 },
 "original": {
@@ -119,66 +119,27 @@
 "invokeai-src": {
 "flake": false,
 "locked": {
-"lastModified": 1677475057,
-"narHash": "sha256-REtyVcyRgspn1yYvB4vIHdOrPRZRNSSraepHik9MfgE=",
+"lastModified": 1697424725,
+"narHash": "sha256-y3nxZ4PQ/d2wMX1crpJPDMYXf48YBG0sRIBOTgN6XlI=",
 "owner": "invoke-ai",
 "repo": "InvokeAI",
-"rev": "650f4bb58ceca458bff1410f35cd6d6caad399c6",
+"rev": "ad786130ffb11f91cbfcc40846114dd1fdcecdf6",
 "type": "github"
 },
 "original": {
 "owner": "invoke-ai",
-"ref": "v2.3.1.post2",
+"ref": "v3.3.0post3",
 "repo": "InvokeAI",
 "type": "github"
 }
 },
-"koboldai-src": {
-"flake": false,
-"locked": {
-"lastModified": 1668957963,
-"narHash": "sha256-fKQ/6LiMmrfSWczC5kcf6M9cpuF9dDYl2gJ4+6ZLSdY=",
-"owner": "koboldai",
-"repo": "koboldai-client",
-"rev": "f2077b8e58db6bd47a62bf9ed2649bb0711f9678",
-"type": "github"
-},
-"original": {
-"owner": "koboldai",
-"ref": "1.19.2",
-"repo": "koboldai-client",
-"type": "github"
-}
-},
-"nix-darwin": {
-"inputs": {
-"nixpkgs": [
-"hercules-ci-effects",
-"hercules-ci-agent",
-"nixpkgs"
-]
-},
-"locked": {
-"lastModified": 1667419884,
-"narHash": "sha256-oLNw87ZI5NxTMlNQBv1wG2N27CUzo9admaFlnmavpiY=",
-"owner": "LnL7",
-"repo": "nix-darwin",
-"rev": "cfc0125eafadc9569d3d6a16ee928375b77e3100",
-"type": "github"
-},
-"original": {
-"owner": "LnL7",
-"repo": "nix-darwin",
-"type": "github"
-}
-},
 "nixpkgs": {
 "locked": {
-"lastModified": 1672262501,
-"narHash": "sha256-ZNXqX9lwYo1tOFAqrVtKTLcJ2QMKCr3WuIvpN8emp7I=",
+"lastModified": 1686501370,
+"narHash": "sha256-G0WuM9fqTPRc2URKP9Lgi5nhZMqsfHGrdEbrLvAPJcg=",
 "owner": "NixOS",
 "repo": "nixpkgs",
-"rev": "e182da8622a354d44c39b3d7a542dc12cd7baa5f",
+"rev": "75a5ebf473cd60148ba9aec0d219f72e5cf52519",
 "type": "github"
 },
 "original": {
@@ -191,11 +152,11 @@
 "nixpkgs-lib": {
 "locked": {
 "dir": "lib",
-"lastModified": 1672350804,
-"narHash": "sha256-jo6zkiCabUBn3ObuKXHGqqORUMH27gYDIFFfLq5P4wg=",
+"lastModified": 1685564631,
+"narHash": "sha256-8ywr3AkblY4++3lIVxmrWZFzac7+f32ZEhH/A8pNscI=",
 "owner": "NixOS",
 "repo": "nixpkgs",
-"rev": "677ed08a50931e38382dbef01cba08a8f7eac8f6",
+"rev": "4f53efe34b3a8877ac923b9350c874e3dcd5dc0a",
 "type": "github"
 },
 "original": {
@@ -208,11 +169,11 @@
 },
 "nixpkgs_2": {
 "locked": {
-"lastModified": 1677932085,
-"narHash": "sha256-+AB4dYllWig8iO6vAiGGYl0NEgmMgGHpy9gzWJ3322g=",
+"lastModified": 1697059129,
+"narHash": "sha256-9NJcFF9CEYPvHJ5ckE8kvINvI84SZZ87PvqMbH6pro0=",
 "owner": "NixOS",
 "repo": "nixpkgs",
-"rev": "3c5319ad3aa51551182ac82ea17ab1c6b0f0df89",
+"rev": "5e4c2ada4fcd54b99d56d7bd62f384511a7e2593",
 "type": "github"
 },
 "original": {
@@ -222,36 +183,30 @@
 "type": "github"
 }
 },
-"pre-commit-hooks-nix": {
-"inputs": {
-"flake-utils": "flake-utils",
-"nixpkgs": [
-"hercules-ci-effects",
-"hercules-ci-agent",
-"nixpkgs"
-]
-},
-"locked": {
-"lastModified": 1667760143,
-"narHash": "sha256-+X5CyeNEKp41bY/I1AJgW/fn69q5cLJ1bgiaMMCKB3M=",
-"owner": "cachix",
-"repo": "pre-commit-hooks.nix",
-"rev": "06f48d63d473516ce5b8abe70d15be96a0147fcd",
-"type": "github"
-},
-"original": {
-"owner": "cachix",
-"repo": "pre-commit-hooks.nix",
-"type": "github"
-}
-},
 "root": {
 "inputs": {
 "flake-parts": "flake-parts",
 "hercules-ci-effects": "hercules-ci-effects",
 "invokeai-src": "invokeai-src",
-"koboldai-src": "koboldai-src",
-"nixpkgs": "nixpkgs_2"
+"nixpkgs": "nixpkgs_2",
+"textgen-src": "textgen-src"
+}
+},
+"textgen-src": {
+"flake": false,
+"locked": {
+"lastModified": 1696789008,
+"narHash": "sha256-+V8XOVnEyImj+a8uCkZXEHXW8bTIBRlnfMcQfcZNgqg=",
+"owner": "oobabooga",
+"repo": "text-generation-webui",
+"rev": "2e471071af48e19867cfa522d2def44c24785c50",
+"type": "github"
+},
+"original": {
+"owner": "oobabooga",
+"ref": "v1.7",
+"repo": "text-generation-webui",
+"type": "github"
 }
 }
 },
```

flake.nix (27 changed lines)

```diff
@@ -11,11 +11,11 @@
 url = "github:NixOS/nixpkgs/nixos-unstable";
 };
 invokeai-src = {
-url = "github:invoke-ai/InvokeAI/v2.3.1.post2";
+url = "github:invoke-ai/InvokeAI/v3.3.0post3";
 flake = false;
 };
-koboldai-src = {
-url = "github:koboldai/koboldai-client/1.19.2";
+textgen-src = {
+url = "github:oobabooga/text-generation-webui/v1.7";
 flake = false;
 };
 flake-parts = {
@@ -29,15 +29,30 @@
 };
 outputs = { flake-parts, invokeai-src, hercules-ci-effects, ... }@inputs:
 flake-parts.lib.mkFlake { inherit inputs; } {
+perSystem = { system, ... }: {
+_module.args.pkgs = import inputs.nixpkgs { config.allowUnfree = true; inherit system; };
+legacyPackages = {
+koboldai = builtins.throw ''
+
+
+koboldai has been dropped from nixified.ai due to lack of upstream development,
+try textgen instead which is better maintained. If you would like to use the last
+available version of koboldai with nixified.ai, then run:
+
+nix run github:nixified.ai/flake/0c58f8cba3fb42c54f2a7bf9bd45ee4cbc9f2477#koboldai
+'';
+};
+};
 systems = [
 "x86_64-linux"
 ];
+debug = true;
 imports = [
 hercules-ci-effects.flakeModule
-./modules/dependency-sets
-./modules/aipython3
+# ./modules/nixpkgs-config
+./overlays
 ./projects/invokeai
-./projects/koboldai
+./projects/textgen
 ./website
 ];
 };
```

```diff
@@ -1,28 +0,0 @@
-{ lib, ... }:
-
-{
-perSystem = { pkgs, ... }: {
-dependencySets = let
-overlays = import ./overlays.nix pkgs;
-
-mkPythonPackages = overlayList: let
-python3' = pkgs.python3.override {
-packageOverrides = lib.composeManyExtensions overlayList;
-};
-in python3'.pkgs;
-
-in {
-aipython3-amd = mkPythonPackages [
-overlays.fixPackages
-overlays.extraDeps
-overlays.torchRocm
-];
-
-aipython3-nvidia = mkPythonPackages [
-overlays.fixPackages
-overlays.extraDeps
-overlays.torchCuda
-];
-};
-};
-}
```

```diff
@@ -1,118 +0,0 @@
-pkgs: {
-fixPackages = final: prev: let
-relaxProtobuf = pkg: pkg.overrideAttrs (old: {
-nativeBuildInputs = old.nativeBuildInputs ++ [ final.pythonRelaxDepsHook ];
-pythonRelaxDeps = [ "protobuf" ];
-});
-in {
-pytorch-lightning = relaxProtobuf prev.pytorch-lightning;
-wandb = relaxProtobuf prev.wandb;
-markdown-it-py = prev.markdown-it-py.overrideAttrs (old: {
-nativeBuildInputs = old.nativeBuildInputs ++ [ final.pythonRelaxDepsHook ];
-pythonRelaxDeps = [ "linkify-it-py" ];
-passthru = old.passthru // {
-optional-dependencies = with final; {
-linkify = [ linkify-it-py ];
-plugins = [ mdit-py-plugins ];
-};
-};
-});
-filterpy = prev.filterpy.overrideAttrs (old: {
-doInstallCheck = false;
-});
-shap = prev.shap.overrideAttrs (old: {
-doInstallCheck = false;
-propagatedBuildInputs = old.propagatedBuildInputs ++ [ final.packaging ];
-pythonImportsCheck = [ "shap" ];
-
-meta = old.meta // {
-broken = false;
-};
-});
-streamlit = let
-streamlit = final.callPackage (pkgs.path + "/pkgs/applications/science/machine-learning/streamlit") {
-protobuf3 = final.protobuf;
-};
-in final.toPythonModule (relaxProtobuf streamlit);
-};
-
-extraDeps = final: prev: let
-rm = d: d.overrideAttrs (old: {
-nativeBuildInputs = old.nativeBuildInputs ++ [ final.pythonRelaxDepsHook ];
-pythonRemoveDeps = [ "opencv-python-headless" "opencv-python" "tb-nightly" "clip" ];
-});
-callPackage = final.callPackage;
-rmCallPackage = path: args: rm (callPackage path args);
-in {
-scikit-image = final.scikitimage;
-opencv-python-headless = final.opencv-python;
-opencv-python = final.opencv4;
-
-safetensors = callPackage ../../packages/safetensors { };
-compel = callPackage ../../packages/compel { };
-apispec-webframeworks = callPackage ../../packages/apispec-webframeworks { };
-pydeprecate = callPackage ../../packages/pydeprecate { };
-taming-transformers-rom1504 =
-callPackage ../../packages/taming-transformers-rom1504 { };
-albumentations = rmCallPackage ../../packages/albumentations { };
-qudida = rmCallPackage ../../packages/qudida { };
-gfpgan = rmCallPackage ../../packages/gfpgan { };
-basicsr = rmCallPackage ../../packages/basicsr { };
-facexlib = rmCallPackage ../../packages/facexlib { };
-realesrgan = rmCallPackage ../../packages/realesrgan { };
-codeformer = callPackage ../../packages/codeformer { };
-clipseg = rmCallPackage ../../packages/clipseg { };
-kornia = callPackage ../../packages/kornia { };
-lpips = callPackage ../../packages/lpips { };
-ffmpy = callPackage ../../packages/ffmpy { };
-picklescan = callPackage ../../packages/picklescan { };
-diffusers = callPackage ../../packages/diffusers { };
-pypatchmatch = callPackage ../../packages/pypatchmatch { };
-fonts = callPackage ../../packages/fonts { };
-font-roboto = callPackage ../../packages/font-roboto { };
-analytics-python = callPackage ../../packages/analytics-python { };
-gradio = callPackage ../../packages/gradio { };
-blip = callPackage ../../packages/blip { };
-fairscale = callPackage ../../packages/fairscale { };
-torch-fidelity = callPackage ../../packages/torch-fidelity { };
-resize-right = callPackage ../../packages/resize-right { };
-torchdiffeq = callPackage ../../packages/torchdiffeq { };
-k-diffusion = callPackage ../../packages/k-diffusion { };
-accelerate = callPackage ../../packages/accelerate { };
-clip-anytorch = callPackage ../../packages/clip-anytorch { };
-clean-fid = callPackage ../../packages/clean-fid { };
-getpass-asterisk = callPackage ../../packages/getpass-asterisk { };
-};
-
-torchRocm = final: prev: rec {
-# TODO: figure out how to patch torch-bin trying to access /opt/amdgpu
-# there might be an environment variable for it, can use a wrapper for that
-# otherwise just grep the world for /opt/amdgpu or something and substituteInPlace the path
-# you can run this thing without the fix by creating /opt and running nix build nixpkgs#libdrm --inputs-from . --out-link /opt/amdgpu
-torch-bin = prev.torch-bin.overrideAttrs (old: {
-src = pkgs.fetchurl {
-name = "torch-1.13.1+rocm5.1.1-cp310-cp310-linux_x86_64.whl";
-url = "https://download.pytorch.org/whl/rocm5.1.1/torch-1.13.1%2Brocm5.1.1-cp310-cp310-linux_x86_64.whl";
-hash = "sha256-qUwAL3L9ODy9hjne8jZQRoG4BxvXXLT7cAy9RbM837A=";
-};
-postFixup = (old.postFixup or "") + ''
-${pkgs.gnused}/bin/sed -i s,/opt/amdgpu/share/libdrm/amdgpu.ids,/tmp/nix-pytorch-rocm___/amdgpu.ids,g $out/${final.python.sitePackages}/torch/lib/libdrm_amdgpu.so
-'';
-rocmSupport = true;
-});
-torchvision-bin = prev.torchvision-bin.overrideAttrs (old: {
-src = pkgs.fetchurl {
-name = "torchvision-0.14.1+rocm5.1.1-cp310-cp310-linux_x86_64.whl";
-url = "https://download.pytorch.org/whl/rocm5.1.1/torchvision-0.14.1%2Brocm5.1.1-cp310-cp310-linux_x86_64.whl";
-hash = "sha256-8CM1QZ9cZfexa+HWhG4SfA/PTGB2475dxoOtGZ3Wa2E=";
-};
-});
-torch = torch-bin;
-torchvision = torchvision-bin;
-};
-
-torchCuda = final: prev: {
-torch = final.torch-bin;
-torchvision = final.torchvision-bin;
-};
-}
```

```diff
@@ -1,15 +0,0 @@
-{ lib, ... }:
-
-let
-inherit (lib) mkOption types;
-in
-
-{
-perSystem.options = {
-dependencySets = mkOption {
-description = "Specially instantiated dependency sets for customized builds";
-type = with types; lazyAttrsOf unspecified;
-default = {};
-};
-};
-}
```

modules/nixpkgs-config/default.nix (27 changed lines, Normal file)

```diff
@@ -0,0 +1,27 @@
+{ inputs, lib, ... }:
+
+{
+perSystem = { system, ... }: {
+_module.args.pkgs = import inputs.nixpkgs {
+inherit system;
+config = {
+allowUnfreePredicate = pkg: builtins.elem (lib.getName pkg) [
+# for Triton
+"cuda_cudart"
+"cuda_nvcc"
+"cuda_nvtx"
+
+# for CUDA Torch
+"cuda_cccl"
+"cuda_cupti"
+"cuda_nvprof"
+"cudatoolkit"
+"cudatoolkit-11-cudnn"
+"libcublas"
+"libcusolver"
+"libcusparse"
+];
+};
+};
+};
+}
```

overlays/default.nix (25 changed lines, Normal file)

```diff
@@ -0,0 +1,25 @@
+{ lib, ... }:
+
+let
+l = lib.extend (import ./lib.nix);
+
+overlaySets = {
+python = import ./python l;
+};
+
+prefixAttrs = prefix: lib.mapAttrs' (name: value: lib.nameValuePair "${prefix}-${name}" value);
+
+in
+
+{
+flake = {
+lib = {
+inherit (l) overlays;
+};
+overlays = lib.pipe overlaySets [
+(lib.mapAttrs prefixAttrs)
+(lib.attrValues)
+(lib.foldl' (a: b: a // b) {})
+];
+};
+}
```
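The following standalone sketch re-evaluates the `prefixAttrs` pipeline from overlays/default.nix to show the overlay names it produces. It is an illustration only: plain numbers stand in for the real overlay functions, the file name `prefix-demo.nix` is hypothetical, and `<nixpkgs>` is assumed to resolve through `NIX_PATH`.

```nix
# prefix-demo.nix (hypothetical): re-evaluates the overlay-flattening pipeline
# from overlays/default.nix. Numbers are placeholders for the real overlays.
let
  lib = import <nixpkgs/lib>;

  prefixAttrs = prefix:
    lib.mapAttrs' (name: value: lib.nameValuePair "${prefix}-${name}" value);

  overlaySets = {
    python = { fixPackages = 1; torchRocm = 2; torchCuda = 3; };
  };

  flattened = lib.pipe overlaySets [
    (lib.mapAttrs prefixAttrs)     # { python = { python-fixPackages = 1; ... }; }
    (lib.attrValues)               # [ { python-fixPackages = 1; ... } ]
    (lib.foldl' (a: b: a // b) {}) # { python-fixPackages = 1; ... }
  ];
in
builtins.attrNames flattened
```

Running `nix-instantiate --eval --strict prefix-demo.nix` should print `[ "python-fixPackages" "python-torchCuda" "python-torchRocm" ]`, because `builtins.attrNames` returns names in sorted order. These are the attribute names a consumer would find under the flake's `overlays` output. Adding another entry to `overlaySets` (say, a hypothetical `node` set) would add `node-*` names to the same flat attribute set.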

overlays/lib.nix (39 changed lines, Normal file)

```diff
@@ -0,0 +1,39 @@
+lib: _: {
+overlays = {
+runOverlay = do: final: prev: do {
+inherit final prev;
+util = {
+callPackageOrTuple = input:
+if lib.isList input then
+assert lib.length input == 2; let
+pkg = lib.head input;
+args = lib.last input;
+in final.callPackage pkg args
+else
+final.callPackage input { };
+};
+};
+
+callManyPackages = packages: lib.overlays.runOverlay ({ util, ... }:
+let
+packages' = lib.listToAttrs (map (x: lib.nameValuePair (baseNameOf x) x) packages);
+in
+lib.mapAttrs (lib.const util.callPackageOrTuple) packages'
+);
+
+applyOverlays = packageSet: overlays: let
+combinedOverlay = lib.composeManyExtensions overlays;
+in
+# regular extensible package set
+if packageSet ? extend then
+packageSet.extend combinedOverlay
+# makeScope-style package set, this case needs to be handled before makeScopeWithSplicing
+else if packageSet ? overrideScope' then
+packageSet.overrideScope' combinedOverlay
+# makeScopeWithSplicing-style package set
+else if packageSet ? overrideScope then
+packageSet.overrideScope combinedOverlay
+else
+throw "don't know how to extend the given package set";
+};
+}
```
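overlays/default.nix exports the helpers from overlays/lib.nix as `flake.lib.overlays`, so a downstream flake could call `applyOverlays` directly. The consumer flake below is a hypothetical sketch. The input name `nixified-ai`, its URL, and both toy overlays are assumptions, and the sketch also assumes the referenced revision still exports `lib.overlays` as this commit does. The top-level nixpkgs set has an `extend` attribute, so `applyOverlays` takes its first branch here.

```nix
# Hypothetical consumer flake; the input name and URL are assumptions.
{
  inputs = {
    nixpkgs.url = "github:NixOS/nixpkgs/nixos-unstable";
    nixified-ai.url = "github:nixified-ai/flake"; # assumed URL
  };

  outputs = { nixpkgs, nixified-ai, ... }:
    let
      pkgs = nixpkgs.legacyPackages.x86_64-linux;
      inherit (nixified-ai.lib.overlays) applyOverlays;

      # pkgs has `extend`, so this becomes
      # pkgs.extend (lib.composeManyExtensions [ ... ]).
      pkgs' = applyOverlays pkgs [
        # Toy overlays for illustration only.
        (final: prev: { my-hello = prev.hello; })
        # Later overlays can see attributes added by earlier ones.
        (final: prev: { my-hello-alias = final.my-hello; })
      ];
    in {
      packages.x86_64-linux.default = pkgs'.my-hello-alias;
    };
}
```

The same call also works for scope-style sets. For a package set built with `makeScope`, the helper falls through to `overrideScope'` or `overrideScope`, so callers do not need to know which extension mechanism a given set uses.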

overlays/python/default.nix (66 changed lines, Normal file)

```diff
@@ -0,0 +1,66 @@
+lib: {
+fixPackages = final: prev: let
+relaxProtobuf = pkg: pkg.overrideAttrs (old: {
+nativeBuildInputs = old.nativeBuildInputs ++ [ final.pythonRelaxDepsHook ];
+pythonRelaxDeps = [ "protobuf" ];
+});
+in {
+invisible-watermark = prev.invisible-watermark.overridePythonAttrs {
+pythonImportsCheck = [ ];
+};
+torchsde = prev.torchsde.overridePythonAttrs { doCheck = false;
+pythonImportsCheck = []; };
+pytorch-lightning = relaxProtobuf prev.pytorch-lightning;
+wandb = relaxProtobuf (prev.wandb.overridePythonAttrs {
+doCheck = false;
+});
+markdown-it-py = prev.markdown-it-py.overrideAttrs (old: {
+nativeBuildInputs = old.nativeBuildInputs ++ [ final.pythonRelaxDepsHook ];
+pythonRelaxDeps = [ "linkify-it-py" ];
+passthru = old.passthru // {
+optional-dependencies = with final; {
+linkify = [ linkify-it-py ];
+plugins = [ mdit-py-plugins ];
+};
+};
+});
+filterpy = prev.filterpy.overrideAttrs (old: {
+doInstallCheck = false;
+});
+shap = prev.shap.overrideAttrs (old: {
+doInstallCheck = false;
+propagatedBuildInputs = old.propagatedBuildInputs ++ [ final.packaging ];
+pythonImportsCheck = [ "shap" ];
+
+meta = old.meta // {
+broken = false;
+};
+});
+streamlit = let
+streamlit = final.callPackage (final.pkgs.path + "/pkgs/applications/science/machine-learning/streamlit") {
+protobuf3 = final.protobuf;
+};
+in final.toPythonModule (relaxProtobuf streamlit);
+opencv-python-headless = final.opencv-python;
+opencv-python = final.opencv4;
+};
+
+torchRocm = final: prev: {
+torch = prev.torch.override {
+magma = prev.pkgs.magma-hip;
+cudaSupport = false;
+rocmSupport = true;
+};
+torchvision = prev.torchvision.overridePythonAttrs (old: {
+patches = (old.patches or []) ++ [ ./torchvision/fix-rocm-build.patch ];
+});
+};
+
+torchCuda = final: prev: {
+torch = prev.torch.override {
+magma = prev.pkgs.magma-cuda-static;
+cudaSupport = true;
+rocmSupport = false;
+};
+};
+}
```
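The Python overlays defined above reach consumers under prefixed names (`python-fixPackages`, `python-torchRocm`, `python-torchCuda`) through the `flake.overlays` output in overlays/default.nix. The sketch below shows one way to use them. It mirrors the `pkgs.python3.override { packageOverrides = ...; }` pattern of the removed `mkPythonPackages` helper. The function arguments `nixpkgs` and `nixified-ai` are assumed flake inputs, not names from this commit.

```nix
# Hypothetical fragment: build a CUDA-flavoured Python environment from the
# overlays exported by this flake. `nixpkgs` and `nixified-ai` are assumed inputs.
{ nixpkgs, nixified-ai }:
let
  pkgs = import nixpkgs {
    system = "x86_64-linux";
    config.allowUnfree = true; # the CUDA libraries are unfree
  };

  python3' = pkgs.python3.override {
    # Compose the overlays in order; torchCuda sees fixPackages' changes.
    packageOverrides = pkgs.lib.composeManyExtensions [
      nixified-ai.overlays.python-fixPackages
      nixified-ai.overlays.python-torchCuda
    ];
    # Keep withPackages and friends pointing at the overridden interpreter.
    self = python3';
  };
in
python3'.withPackages (ps: [ ps.torch ps.torchvision ])
```

With `python-torchCuda`, `torch` is the nixpkgs source package overridden with `cudaSupport = true`. Expect a long local build unless a binary cache you trust already provides that exact derivation. For an AMD machine, swapping in `python-torchRocm` should select the ROCm variant, including the patched `torchvision`.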

overlays/python/torchvision/fix-rocm-build.patch (30 changed lines, Normal file)

```diff
@@ -0,0 +1,30 @@
+From 20d90dfc2be8fedce229f47982db656862c9dc32 Mon Sep 17 00:00:00 2001
+From: Paul Mulders <justinkb@gmail.com>
+Date: Thu, 11 May 2023 00:43:51 +0200
+Subject: [PATCH] setup.py: fix ROCm build (#7573)
+
+---
+setup.py | 8 +++++++-
+1 file changed, 7 insertions(+), 1 deletion(-)
+
+diff --git a/setup.py b/setup.py
+index c523ba073c5..732b5c0e1b7 100644
+--- a/setup.py
++++ b/setup.py
+@@ -328,9 +328,15 @@ def get_extensions():
+image_src = (
+glob.glob(os.path.join(image_path, "*.cpp"))
++ glob.glob(os.path.join(image_path, "cpu", "*.cpp"))
+- + glob.glob(os.path.join(image_path, "cuda", "*.cpp"))
+)
+
++ if is_rocm_pytorch:
++ image_src += glob.glob(os.path.join(image_path, "hip", "*.cpp"))
++ # we need to exclude this in favor of the hipified source
++ image_src.remove(os.path.join(image_path, "image.cpp"))
++ else:
++ image_src += glob.glob(os.path.join(image_path, "cuda", "*.cpp"))
++
+if use_png or use_jpeg:
+ext_modules.append(
+extension(
```

```diff
@@ -2,18 +2,18 @@
 # If you run pynixify again, the file will be either overwritten or
 # deleted, and you will lose the changes you made to it.

-{ buildPythonPackage, fetchPypi, lib, numpy, packaging, psutil, pyyaml, torch }:
+{ buildPythonPackage, fetchPypi, lib, numpy, packaging, psutil, pyyaml, torch, huggingface-hub }:

 buildPythonPackage rec {
 pname = "accelerate";
-version = "0.13.1";
+version = "0.23.0";

 src = fetchPypi {
 inherit pname version;
-sha256 = "1dk82s80rq8xp3v4hr9a27vgj9k3gy9yssp7ww7i3c0vc07gx2cv";
+sha256 = "sha256-ITnSGfqaN3c8QnnJr+vp9oHy8p6FopsL6NdiV72OSr4=";
 };

-propagatedBuildInputs = [ numpy packaging psutil pyyaml torch ];
+propagatedBuildInputs = [ numpy packaging psutil pyyaml torch huggingface-hub ];

 # TODO FIXME
 doCheck = false;
```

79

packages/autogptq/default.nix

Normal file

@@ -0,0 +1,79 @@
+{ lib
+, buildPythonPackage
+, fetchFromGitHub
+, safetensors
+, accelerate
+, rouge
+, peft
+, transformers
+, datasets
+, torch
+, cudaPackages
+, rocmPackages
+, symlinkJoin
+, which
+, ninja
+, pybind11
+, gcc11Stdenv
+}:
+let
+  cuda-native-redist = symlinkJoin {
+    name = "cuda-redist";
+    paths = with cudaPackages; [
+      cuda_cudart # cuda_runtime.h
+      cuda_nvcc
+    ];
+  };
+in
+
+buildPythonPackage rec {
+  pname = "autogptq";
+  version = "0.4.2";
+  format = "setuptools";
+
+  BUILD_CUDA_EXT = "1";
+
+  CUDA_HOME = cuda-native-redist;
+  CUDA_VERSION = cudaPackages.cudaVersion;
+
+  buildInputs = [
+    pybind11
+    cudaPackages.cudatoolkit
+  ];
+
+  preBuild = ''
+    export PATH=${gcc11Stdenv.cc}/bin:$PATH
+  '';
+
+  nativeBuildInputs = [
+    which
+    ninja
+    rocmPackages.clr
+  ];
+
+  src = fetchFromGitHub {
+    owner = "PanQiWei";
+    repo = "AutoGPTQ";
+    rev = "51c043c6bef1380e121474ad73ea2a22f2fb5737";
+    hash = "sha256-O/ox/VSMgvqK9SWwlaz8o12fLkz9591p8CVC3e8POQI=";
+  };
+
+  pythonImportsCheck = [ "auto_gptq" ];
+
+  propagatedBuildInputs = [
+    safetensors
+    accelerate
+    rouge
+    peft
+    transformers
+    datasets
+    torch
+  ];
+
+  meta = with lib; {
+    description = "An easy-to-use LLMs quantization package with user-friendly apis, based on GPTQ algorithm";
+    homepage = "https://github.com/PanQiWei/AutoGPTQ";
+    license = licenses.mit;
+    maintainers = with maintainers; [ ];
+  };
+}
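The autogptq expression sets `CUDA_HOME` to `cuda-native-redist`, which is a `symlinkJoin` of `cuda_cudart` (for `cuda_runtime.h`) and `cuda_nvcc`. As a result, one prefix holds both the runtime headers and the compiler. As a rough illustration, the Python sketch below merges several directory trees into one tree of symlinks. It is not nixpkgs' implementation. The package contents are hypothetical stand-ins, and the rule that the first input wins when two inputs provide the same path is an assumption of this sketch.

```python
import os
import tempfile


def symlink_join(out, paths):
    """Populate `out` with real directories and symlinks to every file under `paths`.

    Earlier inputs win on conflicts (an assumption made for this sketch).
    """
    for root in paths:
        for dirpath, _dirnames, filenames in os.walk(root):
            rel = os.path.relpath(dirpath, root)
            target_dir = os.path.normpath(os.path.join(out, rel))
            os.makedirs(target_dir, exist_ok=True)
            for name in filenames:
                link = os.path.join(target_dir, name)
                if not os.path.lexists(link):
                    os.symlink(os.path.join(dirpath, name), link)
    return out


def make_pkg(base, name, files):
    """Create a fake package prefix containing empty files at the given relative paths."""
    prefix = os.path.join(base, name)
    for rel in files:
        full = os.path.join(prefix, rel)
        os.makedirs(os.path.dirname(full), exist_ok=True)
        open(full, "w").close()
    return prefix


tmp = tempfile.mkdtemp()
cudart = make_pkg(tmp, "cuda_cudart", ["include/cuda_runtime.h", "lib/libcudart.so"])
nvcc = make_pkg(tmp, "cuda_nvcc", ["bin/nvcc", "include/crt/host_config.h"])
cuda_home = symlink_join(os.path.join(tmp, "cuda-redist"), [cudart, nvcc])

for rel in ["bin/nvcc", "include/cuda_runtime.h"]:
    print(rel, "->", os.readlink(os.path.join(cuda_home, rel)))
```

Directories are created as real directories and only files become links. This lets `include/` receive entries from both inputs.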

@@ -1,18 +1,19 @@
-{ buildPythonPackage, fetchPypi, lib, setuptools, transformers, diffusers, torch }:
+{ buildPythonPackage, fetchPypi, lib, setuptools, transformers, diffusers, pyparsing, torch }:

 buildPythonPackage rec {
   pname = "compel";
-  version = "0.1.7";
+  version = "2.0.2";
   format = "pyproject";

   src = fetchPypi {
     inherit pname version;
-    sha256 = "sha256-JP+PX0yENTNnfsAJ/hzgIA/cr/RhIWV1GEa1rYTdlnc=";
+    sha256 = "sha256-Lp3mS26l+d9Z+Prn662aV9HzadzJU8hkWICkm7GcLHw=";
   };

   propagatedBuildInputs = [
     setuptools
     diffusers
+    pyparsing
     transformers
     torch
   ];

52

packages/controlnet-aux/default.nix

Normal file

@@ -0,0 +1,52 @@
+{ lib
+, buildPythonPackage
+, fetchPypi
+, setuptools
+, wheel
+, filelock
+, huggingface-hub
+, opencv-python
+, torchvision
+, einops
+, scikit-image
+, timm
+, pythonRelaxDepsHook
+}:
+
+buildPythonPackage rec {
+  pname = "controlnet-aux";
+  version = "0.0.7";
+  format = "pyproject";
+
+  src = fetchPypi {
+    pname = "controlnet_aux";
+    inherit version;
+    hash = "sha256-23KZMjum04ni/mt9gTGgWica86SsKldHdUSMTQd4vow=";
+  };
+
+  propagatedBuildInputs = [
+    filelock
+    huggingface-hub
+    opencv-python
+    torchvision
+    einops
+    scikit-image
+    timm
+  ];
+
+  nativeBuildInputs = [
+    setuptools
+    wheel
+    pythonRelaxDepsHook
+  ];
+
+  pythonImportsCheck = [ "controlnet_aux" ];
+  pythonRemoveDeps = [ "opencv-python" ];
+
+  meta = with lib; {
+    description = "Auxillary models for controlnet";
+    homepage = "https://pypi.org/project/controlnet-aux/";
+    license = licenses.asl20;
+    maintainers = with maintainers; [ ];
+  };
+}
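`controlnet-aux` puts `opencv-python` in `pythonRemoveDeps`. That list tells `pythonRelaxDepsHook` to remove the requirement from the built wheel's metadata. The Python sketch below is a simplified model of that metadata edit. It drops `Requires-Dist` lines whose distribution name, normalized PEP 503 style, matches an entry in the list. The helper names and the METADATA excerpt are illustrative. They are not taken from the real wheel or from the hook's source.

```python
import re


def normalize(name):
    """PEP 503-style normalization: lowercase, runs of '-', '_' or '.' become '-'."""
    return re.sub(r"[-_.]+", "-", name).lower()


def remove_requirements(metadata, remove):
    """Return METADATA text without Requires-Dist lines naming any package in `remove`."""
    unwanted = {normalize(n) for n in remove}
    kept = []
    for line in metadata.splitlines():
        match = re.match(r"Requires-Dist:\s*([A-Za-z0-9][A-Za-z0-9._-]*)", line)
        if match and normalize(match.group(1)) in unwanted:
            continue  # drop this requirement
        kept.append(line)
    return "\n".join(kept) + "\n"


# Illustrative excerpt; not the real controlnet_aux METADATA.
metadata = """Metadata-Version: 2.1
Name: controlnet_aux
Version: 0.0.7
Requires-Dist: torch
Requires-Dist: opencv-python
Requires-Dist: timm
"""

print(remove_requirements(metadata, ["opencv-python"]))
```

Because names are compared after normalization, a spelling such as `OpenCV_Python>=4.0` is removed as well.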

@@ -2,29 +2,9 @@
 , buildPythonPackage
 , fetchPypi
 , setuptools
-, writeText
+, safetensors
 , isPy27
-, pytestCheckHook
-, pytest-mpl
 , numpy
-, scipy
-, scikit-learn
-, pandas
-, transformers
-, opencv4
-, lightgbm
-, catboost
-, pyspark
-, sentencepiece
-, tqdm
-, slicer
-, numba
-, matplotlib
-, nose
-, lime
-, cloudpickle
-, ipython
-, packaging
 , pillow
 , requests
 , regex
@@ -34,17 +14,18 @@

 buildPythonPackage rec {
   pname = "diffusers";
-  version = "0.14.0";
+  version = "0.21.4";

   disabled = isPy27;
   format = "pyproject";

   src = fetchPypi {
     inherit pname version;
-    sha256 = "sha256-sqQqEtq1OMtFo7DGVQMFO6RG5fLfSDbeOFtSON+DCkY=";
+    sha256 = "sha256-P6w4gzF5Qn8WfGdd2nHue09eYnIARXqNUn5Aza+XJog=";
   };

   propagatedBuildInputs = [
+    safetensors
     setuptools
     pillow
     numpy

55

packages/dynamicprompts/default.nix

Normal file

@@ -0,0 +1,55 @@
+{ lib
+, buildPythonPackage
+, fetchPypi
+, hatchling
+, jinja2
+, pyparsing
+, pytest
+, pytest-cov
+, pytest-lazy-fixture
+, requests
+, transformers
+}:
+
+buildPythonPackage rec {
+  pname = "dynamicprompts";
+  version = "0.27.1";
+  format = "pyproject";
+
+  src = fetchPypi {
+    inherit pname version;
+    hash = "sha256-lS/UgfZoR4wWozdtSAFBenIRljuPxnL8fMQT3dIA7WE=";
+  };
+
+  nativeBuildInputs = [
+    hatchling
+  ];
+
+  propagatedBuildInputs = [
+    jinja2
+    pyparsing
+  ];
+
+  passthru.optional-dependencies = {
+    dev = [
+      pytest
+      pytest-cov
+      pytest-lazy-fixture
+    ];
+    feelinglucky = [
+      requests
+    ];
+    magicprompt = [
+      transformers
+    ];
+  };
+
+  pythonImportsCheck = [ "dynamicprompts" ];
+
+  meta = with lib; {
+    description = "Dynamic prompts templating library for Stable Diffusion";
+    homepage = "https://pypi.org/project/dynamicprompts/";
+    license = licenses.mit;
+    maintainers = with maintainers; [ ];
+  };
+}

32

packages/easing-functions/default.nix

Normal file

@@ -0,0 +1,32 @@
+{ lib
+, buildPythonPackage
+, fetchPypi
+, setuptools
+, wheel
+}:
+
+buildPythonPackage rec {
+  pname = "easing-functions";
+  version = "1.0.4";
+  pyproject = true;
+
+  src = fetchPypi {
+    pname = "easing_functions";
+    inherit version;
+    hash = "sha256-4Yx5MdRFuF8oxNFa0Kmke7ZdTi7vwNs4QESPriXj+d4=";
+  };
+
+  nativeBuildInputs = [
+    setuptools
+    wheel
+  ];
+
+  pythonImportsCheck = [ "easing_functions" ];
+
+  meta = with lib; {
+    description = "A collection of the basic easing functions for python";
+    homepage = "https://pypi.org/project/easing-functions/";
+    license = licenses.unfree; # FIXME: nix-init did not found a license
+    maintainers = with maintainers; [ ];
+  };
+}

35

packages/fastapi-events/default.nix

Normal file

@@ -0,0 +1,35 @@
+{ lib
+, buildPythonPackage
+, fetchFromGitHub
+, setuptools
+, wheel
+}:
+
+buildPythonPackage rec {
+  pname = "fastapi-events";
+  version = "0.8.0";
+  pyproject = true;
+
+  src = fetchFromGitHub {
+    owner = "melvinkcx";
+    repo = "fastapi-events";
+    rev = "v${version}";
+    hash = "sha256-dfLZDacu5jb2HcfI1Y2/xCDr1kTM6E5xlHAPratD+Yw=";
+  };
+
+  doCheck = false;
+
+  nativeBuildInputs = [
+    setuptools
+    wheel
+  ];
+
+  pythonImportsCheck = [ "fastapi_events" ];
+
+  meta = with lib; {
+    description = "Asynchronous event dispatching/handling library for FastAPI and Starlette";
+    homepage = "https://github.com/melvinkcx/fastapi-events";
+    license = licenses.mit;
+    maintainers = with maintainers; [ ];
+  };
+}

47

packages/fastapi-socketio/default.nix

Normal file

@@ -0,0 +1,47 @@
+{ lib
+, buildPythonPackage
+, fetchPypi
+, setuptools
+, wheel
+, fastapi
+, python-socketio
+, pytest
+}:
+
+buildPythonPackage rec {
+  pname = "fastapi-socketio";
+  version = "0.0.10";
+  format = "pyproject";
+
+  doCheck = false;
+
+  src = fetchPypi {
+    inherit pname version;
+    hash = "sha256-IC+bMZ8BAAHL0RFOySoNnrX1ypMW6uX9QaYIjaCBJyc=";
+  };
+
+  nativeBuildInputs = [
+    setuptools
+    wheel
+  ];
+
+  propagatedBuildInputs = [
+    fastapi
+    python-socketio
+  ];
+
+  passthru.optional-dependencies = {
+    test = [
+      pytest
+    ];
+  };
+
+  pythonImportsCheck = [ "fastapi_socketio" ];
+
+  meta = with lib; {
+    description = "Easily integrate socket.io with your FastAPI app";
+    homepage = "https://pypi.org/project/fastapi-socketio/";
+    license = licenses.asl20;
+    maintainers = with maintainers; [ ];
+  };
+}

100

packages/fastapi/default.nix

Normal file

@@ -0,0 +1,100 @@
+{ lib
+, buildPythonPackage
+, fetchFromGitHub
+, pydantic
+, starlette
+, pytestCheckHook
+, pytest-asyncio
+, aiosqlite
+, flask
+, httpx
+, hatchling
+, orjson
+, passlib
+, peewee
+, python-jose
+, sqlalchemy
+, trio
+, pythonOlder
+}:
+
+buildPythonPackage rec {
+  pname = "fastapi";
+  version = "0.85.2";
+  format = "pyproject";
+
+  disabled = pythonOlder "3.7";
+
+  src = fetchFromGitHub {
+    owner = "tiangolo";
+    repo = pname;
+    rev = "refs/tags/${version}";
+    hash = "sha256-j3Set+xWNcRqbn90DJOJQhMrJYI3msvWHlFvN1habP0=";
+  };
+
+  nativeBuildInputs = [
+    hatchling
+  ];
+
+  postPatch = ''
+    substituteInPlace pyproject.toml \
+      --replace "starlette==" "starlette>="
+  '';
+
+  propagatedBuildInputs = [
+    starlette
+    pydantic
+  ];
+
+  doCheck = false;
+
+  checkInputs = [
+    aiosqlite
+    flask
+    httpx
+    orjson
+    passlib
+    peewee
+    python-jose
+    pytestCheckHook
+    pytest-asyncio
+    sqlalchemy
+    trio
+  ] ++ passlib.optional-dependencies.bcrypt;
+
+  pytestFlagsArray = [
+    # ignoring deprecation warnings to avoid test failure from
+    # tests/test_tutorial/test_testing/test_tutorial001.py
+    "-W ignore::DeprecationWarning"
+  ];
+
+  disabledTestPaths = [
+    # Disabled tests require orjson which requires rust nightly
+    "tests/test_default_response_class.py"
+    # Don't test docs and examples
+    "docs_src"
+  ];
+
+  disabledTests = [
+    "test_get_custom_response"
+    # Failed: DID NOT RAISE <class 'starlette.websockets.WebSocketDisconnect'>
+    "test_websocket_invalid_data"
+    "test_websocket_no_credentials"
+    # TypeError: __init__() missing 1...starlette-releated
+    "test_head"
+    "test_options"
+    "test_trace"
+  ];
+
+  pythonImportsCheck = [
+    "fastapi"
+  ];
+
+  meta = with lib; {
+    description = "Web framework for building APIs";
+    homepage = "https://github.com/tiangolo/fastapi";
+    license = licenses.mit;
+    maintainers = with maintainers; [ wd15 ];
+  };
+}
+
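In the fastapi expression, the `postPatch` step rewrites `starlette==` to `starlette>=` in `pyproject.toml`. This turns the exact starlette pin into a lower bound. `substituteInPlace --replace` performs a literal text substitution that replaces every occurrence, and the Python sketch below reproduces that behavior. The helper name is hypothetical. The `dependencies` excerpt is illustrative, and the pinned versions in it are assumptions rather than values taken from this commit.

```python
def substitute_in_place(text, old, new):
    """Literal replace-all on a file's contents, modeling substituteInPlace --replace."""
    return text.replace(old, new)


# Illustrative pyproject.toml excerpt; the pinned versions are assumptions.
pyproject = '''[project]
dependencies = [
    "starlette==0.20.4",
    "pydantic >=1.6.2,<2.0.0",
]
'''

relaxed = substitute_in_place(pyproject, "starlette==", "starlette>=")
print(relaxed)
```

Because the match is literal, a pin written with a space (for example `starlette ==0.20.4`) would be left unchanged.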

44

packages/flexgen/default.nix

Normal file

@@ -0,0 +1,44 @@
+{ lib
+, buildPythonPackage
+, fetchPypi
+, setuptools
+, attrs
+, numpy
+, pulp
+, torch
+, tqdm
+, transformers
+}:
+
+buildPythonPackage rec {
+  pname = "flexgen";
+  version = "0.1.7";
+  format = "pyproject";
+
+  src = fetchPypi {
+    inherit pname version;
+    hash = "sha256-GYnl5CYsMWgTdbCfhWcNyjtpnHCXAcYWtMUmAJcRQAM=";
+  };
+
+  nativeBuildInputs = [
+    setuptools
+  ];
+
+  propagatedBuildInputs = [
+    attrs
+    numpy
+    pulp
+    torch
+    tqdm
+    transformers
+  ];
+
+  pythonImportsCheck = [ "flexgen" ];
+
+  meta = with lib; {
+    description = "Running large language models like OPT-175B/GPT-3 on a single GPU. Focusing on high-throughput large-batch generation";
+    homepage = "https://github.com/FMInference/FlexGen";